Putting Things Into Words

Much has been made of the loss of jobs at the hands of technology, especially AI. This makes a ton of sense: it’s scary as hell to lose your primary (or only) source of income, and it can be really hard to find another job.

Layoffs are no stranger to the corporate world, but when you hear a series of them paired with headlines about how AI is replacing workers, you start putting two and two together.

The current consensus is that while jobs are being erased from existence, new roles are cropping up even faster. The problem is that since all the cards are being thrown up into the air, there’s no guarantee they’ll be put back in the same order. In fact, there’s likely to be tons of disruptions and plenty of unemployment even while there are lots of job openings.

That’s where the skill gap comes into play. Classically, you might address this by attending a community college to pick up a new skill, or maybe by jumping into an entry-level position in an entirely new line of work. The problem with entry-level is that the pay and benefits might not match what you had before, though.

So: what do you do?

I see lots of articles, videos, and various other outlets suggesting potential solutions, and many of them ring true to me. The three most common recommendations are to learn how to use AI tools (duh), to focus on creative or artistic (“human”) endeavors or find other physical jobs that are hard to replicate, and to become the type of person who enjoys learning new skills all the time.

These are fantastic suggestions, full stop. I’m not here to be critical of anyone who suggests practicing with AI every day; after all, a huge part of my research process—and the reason I can find drunken octopuses so quickly—is fast, effective search with a large language model or two.

Likewise, doing a job that seems unlikely to be replaced by AI? That’s solid advice. There is the issue that you might be wrong, of course—there are surprises every day in how these things develop, but the strategy is sound.

Constantly learning new skills might be the best strategy out of the three. Here, you’re simply expanding your base of options. This is always great advice, as long as you can afford to experiment. You just need bandwidth.

I want to offer a fourth recommendation that I haven’t seen anywhere else, although I’m sure it’s out there, because it’s fairly obvious to me. I bet it’s obvious to other people as well.

The advice? Learn how to put things into words.

Why? Because we are now in an era where you can make magic happen, but only if you know how to cast the spell.

If you want something, you can have it, but only if you know how to ask for it.

That’s not literally true of everything across the board, of course—but it’s true for more things every day. If you ask AI to give you a dragon, you can get a dragon:

Not bad, right?

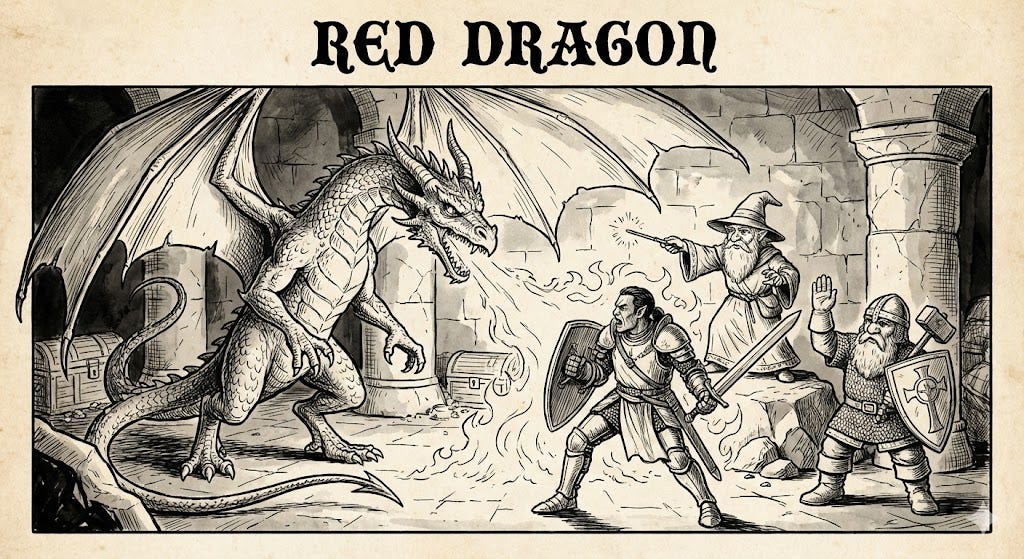

However, you can also ask AI to give you “a red dragon from AD&D’s first edition, consistent with the drawings inside Monster Manual I, battling a half-orc paladin, a gnomish wizard, and a dwarvish cleric”:

The bottom image is closer to what I want to see. The skill you need to do this is to put the idea into words.

Preach!

I've been talking about learning to be deliberate in how you communicate with AI instead of "splatterprompting" for a while now. I'm also a fan of the iterative process: Ask for a dragon, see what AI returns by default, compare it to your vision, spot what's missing or different. Ask for a new dragon with those things corrected. Rinse, repeat.

But I think putting things into words is just as relevant, perhaps counterintuitively, when it comes to simply writing for an audience. Because yes, AI can spit out passable prose at scale in seconds, but having a unique voice is only possible when you're not an "average common denominator aggregator." I really think we'll see more people gravitating away from midstream AI slop towards people they can relate to.

So yes: Learn to describe shit for AI, but also for other humans.

Since it apparently has never occurred to anybody who dislikes AI to just find their nearest operation centre and destroy the machines with a baseball bat, or even just pull the plug on them, the most obvious concern is to figure out how to positively employ AI. Industrial change has seen both things happen.

The Luddites were weavers who were put out of business by mechanical looms, and they resorted to destroying the machines until the British authorities put them out of business. This was about two centuries ago, but the parallel is obvious. Likewise for the advent of "automation" in the 20th century: labor unions protested the process because it obviously put their members out of work, but their employers ignored and oppressed them for doing so. The unions, however, still retained some influence as a bargaining body for those who were left in their trades, something the Luddites never even considered that they could do.

They want to force us to use AI, but the most effective response is to ignore and bankrupt them so they will have to spend money on something other than AI for once, or, more reasonably, to determine what AI can be used for ethically and morally in the professions. Both things are happening now...