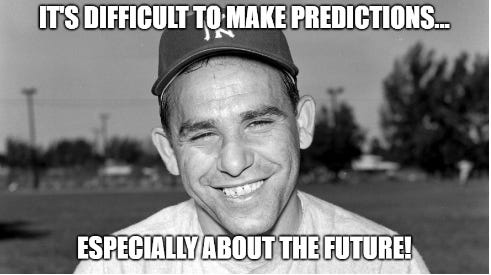

What will the next hundred years look like?

That’s probably something that no one person can answer. Fortunately for you, I’ve brought in a second person to help us think through this most difficult of questions, so we should be all set!

Please welcome Scoot to Goatfury Writes for the day! Scoot writes for Gibberish, and together we have had a great time speculating about future technologies over on Notes. I’ll mention a few things, then pass the mic over to him for a bit, and then try to draw some conclusions based on what we both think.

Here goes!
